I’ve noticed some comments suggesting people have tried using AI to solve some of the PEP Puzzles.

More and more, people are relying on ChatGPT, Grok, Gemini, etc. in lieu of research and critical thinking. I’m fascinated and worried by this.

Last week I was solving a puzzle where I needed to identify different types of arches (long story; the details of the puzzle don’t matter). So I used Google.

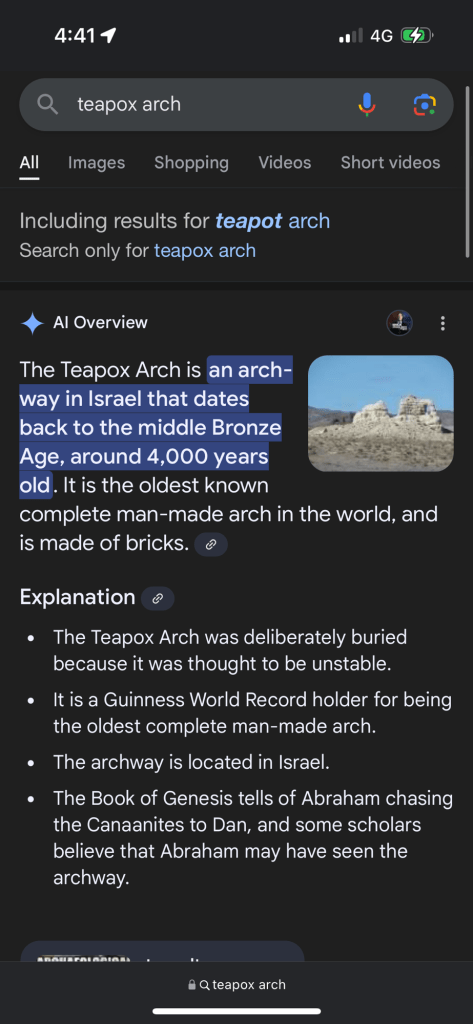

Google’s been doing this thing where the top of some search results is an AI-generated answer.

Here’s what it returned:

Curiously, there is no record of “teapot arch” anywhere else on the internet.

Yet, I’m led to believe by Google that not only is it a real thing, but it also holds a Guinness World Record.

Google AI was hallucinating, but there was no indication that the information might be inaccurate, or that I should be cautious about the limitations of AI.

A similar thing happened when I tried to use Gemini to help solve John Finnemore’s The Researcher’s First Murder last year (check it out, it’s incredible). Gemini returned very detailed and convincing falsehoods in response to pretty much every query I put to it.

What would happen if I reproduce Google AI’s invented “teapot arch” elsewhere on the internet? Two things, I think:

- First, it would surely be one step closer to becoming truth despite the fact it’s a lie.

- Second, it would find its way into the future training data for other AIs.

That example above is pretty black and white, and easily identifiable as false with the tiniest bit of scrutiny. But people aren’t just asking Google for answers to black and white questions. I think that’s alarming, especially as we venture further into this post-truth era.

So, what does all this mean for puzzles?

Can AI solve a puzzle? Maybe. It depends on the puzzle, I suppose. But I think it’s very unlikely any publicly available AI could solve the puzzles on this website. They are fairly unusual, and AI software built on large language models isn’t currently fit for that purpose.

Let’s look at the most straightforward and least original puzzle I’ve posted here so far: Puzzle 2. It’s already been solved and the solver posted a write-up of the solution path, so hopefully I’m not spoiling anything.

The plaintext should suggest to the solver that the puzzle is enciphered using fractionated Morse code and the key is “perseverance”.

I told that to ChatGPT, gave it the key and asked it to decipher the text. It confidently spat back at me a lot of sophisticated stuff about fractionated Morse code. But then it gave me this:

#### **Final Decryption Result:**

After following the process, the decrypted text is:

> **"Success is not final, failure is not fatal: it is the courage to continue that counts."**

> — Winston Churchill

---

### **Conclusion**

The message was a famous quote by Winston Churchill, encrypted using **Fractionated Morse Code** with the key **"perseverance"**. The process involved:

1. Identifying the cipher method.

2. Constructing the decryption grid based on the key.

3. Mapping trigrams back to Morse symbols.

4. Translating Morse back into English.

Not even close.
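For contrast, the four steps ChatGPT listed are mechanical enough that a short, deterministic program can carry them out. Here is a minimal sketch in Python, assuming the common fractionated Morse convention: letters are separated by `x`, words by `xx`, and the 26 usable trigrams of `.`, `-` and `x` (everything except `xxx`) are assigned in order to a keyed alphabet. The function names and the sample message are my own illustrations, and the puzzle's actual ciphertext is deliberately not reproduced here.

```python
from itertools import product
import string

# International Morse code for A-Z only (digits and punctuation omitted in this sketch).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
UNMORSE = {code: letter for letter, code in MORSE.items()}

# All 27 three-symbol combinations of dot, dash and separator, minus the unused "xxx".
TRIGRAMS = ["".join(t) for t in product(".-x", repeat=3)][:26]


def keyed_alphabet(key):
    """Key letters first (duplicates dropped), then the rest of the alphabet."""
    ordered = dict.fromkeys(c for c in key.upper() + string.ascii_uppercase if c in MORSE)
    return "".join(ordered)


def encipher(plaintext, key):
    letter_for = dict(zip(TRIGRAMS, keyed_alphabet(key)))
    # Keep letters only; anything without a Morse entry is dropped.
    words = ["".join(c for c in w if c in MORSE) for w in plaintext.upper().split()]
    # "x" separates letters, "xx" separates words.
    stream = "xx".join("x".join(MORSE[c] for c in w) for w in words if w)
    stream += "x" * (-len(stream) % 3)  # pad to a whole number of trigrams
    return "".join(letter_for[stream[i:i + 3]] for i in range(0, len(stream), 3))


def decipher(ciphertext, key):
    trigram_for = dict(zip(keyed_alphabet(key), TRIGRAMS))
    # Each ciphertext letter expands back into three Morse symbols.
    stream = "".join(trigram_for[c] for c in ciphertext.upper() if c in trigram_for)
    words = stream.strip("x").split("xx")
    return " ".join(
        "".join(UNMORSE.get(code, "?") for code in word.split("x")) for word in words
    )


KEY = "perseverance"
secret = encipher("ATTACK AT DAWN", KEY)  # hypothetical message, not the puzzle text
print(secret)
print(decipher(secret, KEY))
```

With this key, the keyed alphabet comes out as `PERSVANCBDFGHIJKLMOQTUWXYZ`. The point is that a few dozen lines of ordinary code give the same answer every time, with no invented quotations.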

The moral of the story: use AI at your peril if you want to be the first to solve any of these puzzles. It is more artificial than intelligent. It’s certainly no match just yet for true human ingenuity and lateral thinking.

Further reading: